Apple has announced a new system to be included in iPhones that will automatically scan those devices to identify whether they contain any media featuring child sexual abuse.

It is part of a range of child protection features launching later this year in the US through updates to iOS 15 and iPadOS. The system will compare the images on users' devices to a database of known abuse images.

If a match is found, Apple says it will report the incident to the US National Centre for Missing and Exploited Children (NCMEC). It is not clear which other national authorities the company will contact outside of the US, nor whether the features will be available outside of the US.

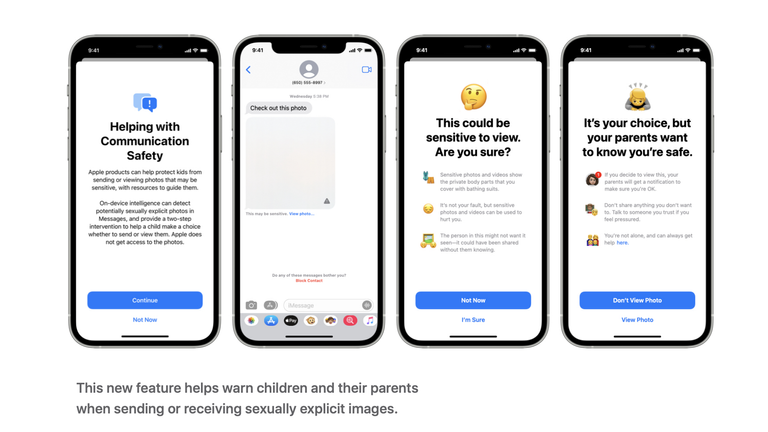

Among the other features announced by Apple is a move to scan end-to-end encrypted messages on behalf of parents to identify when a child receives or sends a sexually explicit photo. Children will be offered "helpful resources" and reassured that "it is okay if they do not want to view this photo".

The announcement has provoked immediate concern from respected computer scientists, including Ross Anderson, professor of engineering at the University of Cambridge, and Matthew Green, associate professor of cryptography at Johns Hopkins University.

Professor Anderson described the idea as "absolutely appalling" to the Financial Times, warning "it is going to lead to distributed bulk surveillance of... our phones and laptops".

Dr Green - who announced the new programme before Apple made a statement on it - warned on Twitter: "Regardless of what Apple's long term plans are, they've sent a very clear signal.

"In their (very influential) opinion, it is safe to build systems that scan users' phones for prohibited content. Whether they turn out to be right or wrong on that point hardly matters."

"This will break the dam - governments will demand it from everyone. And by the time we find out it was a mistake, it will be way too late," Dr Green added.

The criticism has not been universal. John Clark, the president and chief executive of NCMEC, said: "We know this crime can only be combated if we are steadfast in our dedication to protecting children. We can only do this because technology partners, like Apple, step up and make their dedication known."

The former Home Secretary Sajid Javid tweeted that he was "delighted to see Apple taking meaningful action to tackle child sexual abuse".

Others who have praised it include Professor Mihir Bellare, a computer scientist at the University of California, San Diego; Stephen Balkam, the chief executive of the Family Online Safety Institute; and former US attorney general Eric Holder.

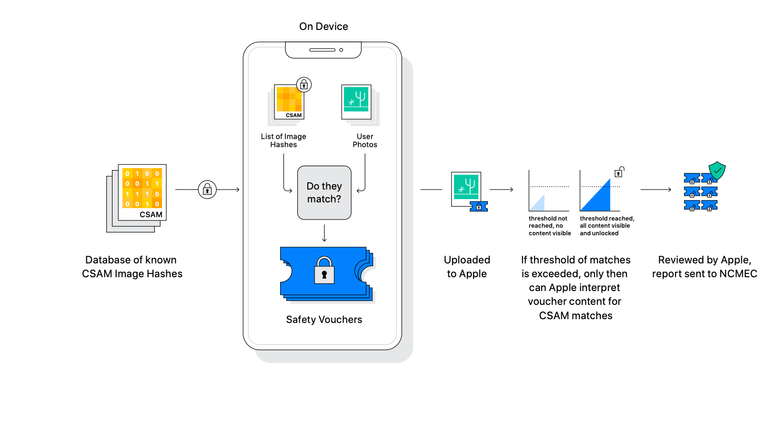

Apple stressed to Sky News that the detection system is built with users' privacy in mind: it can only work to identify abuse images, such as those collected by NCMEC, and will do so on the user's device - before the image is uploaded to iCloud.

However, Dr Green warned that the way the system worked - downloading a list of fingerprints produced by NCMEC that corresponded to its database of abuse images - introduced new security risks for users.

"Whoever controls this list can search for whatever content they want on your phone, and you don't really have any way to know what's on that list because it's invisible to you (and just a bunch of opaque numbers, even if you hack into your phone to get the list)."

"The theory is that you will trust Apple to only include really bad images. Say, images curated by the NCMEC. You'd better trust them, because trust is all you have," Dr Green added.

Explaining the technology, Apple said: "Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organisations."
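As a rough illustration of what matching against a list of fingerprints could look like, the sketch below checks one fingerprint against a small set of known values. It is not Apple's implementation: the 64-bit values, the names `KNOWN_FINGERPRINTS`, `hamming_distance` and `matches_known`, and the `max_distance` tolerance are all assumptions made for illustration only.

```python
from typing import Iterable

# Hypothetical 64-bit fingerprints standing in for a supplied list.
# The numbers are made up; nothing about them reveals what they represent.
KNOWN_FINGERPRINTS = {0xF0F0F0F0F0F0F0F0, 0x123456789ABCDEF0}


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_known(fingerprint: int, known: Iterable[int], max_distance: int = 0) -> bool:
    """Return True if the fingerprint is within max_distance bits of any known one.

    max_distance=0 demands an exact match; a small positive value tolerates
    fingerprints that differ in a few bits (an assumed tolerance, not Apple's).
    """
    return any(hamming_distance(fingerprint, k) <= max_distance for k in known)


if __name__ == "__main__":
    print(matches_known(0xF0F0F0F0F0F0F0F0, KNOWN_FINGERPRINTS))     # exact match
    print(matches_known(0xF0F0F0F0F0F0F0F1, KNOWN_FINGERPRINTS, 1))  # one bit off
    print(matches_known(0x0, KNOWN_FINGERPRINTS))                    # unrelated value
```

The list here is just a set of numbers, which echoes Dr Green's point: the values themselves say nothing about which images they correspond to.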

The fingerprinting system Apple is using is called NeuralHash. It is a perceptual hashing function which creates fingerprints for images in a different way to traditional cryptographic hashing functions.

Perceptual hashing is also believed to be used by Facebook in its programme that stores users' private sexual pictures in order to prevent that material being seen by strangers.

These hashing algorithms are designed to be able to identify the same image even if it has been modified or altered, something which cryptographic hashes do not account for.

The images here using the MD5 cryptographic hashing algorithm and the pHash perceptual hashing algorithm demonstrate this.
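The same contrast can be sketched in a few lines of code. The example below is an assumption-laden illustration, not the method used by Apple or by pHash: it uses a simple "average hash" (a much more basic perceptual hash than pHash or NeuralHash) on a made-up 4x4 greyscale image, and compares it with MD5 from Python's standard `hashlib` module. The helper names `average_hash` and `md5_hex` and the pixel values are hypothetical.

```python
import hashlib


def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set if the pixel is above the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def md5_hex(pixels):
    """Cryptographic hash of the raw pixel bytes (values must be 0-255)."""
    data = bytes(p for row in pixels for p in row)
    return hashlib.md5(data).hexdigest()


# A made-up 4x4 greyscale "image" and a slightly brightened copy of it.
original = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
brightened = [[min(p + 5, 255) for p in row] for row in original]

if __name__ == "__main__":
    # The MD5 digests differ because every byte has changed.
    print(md5_hex(original) == md5_hex(brightened))
    # The average hashes agree because the light/dark pattern is unchanged.
    print(average_hash(original) == average_hash(brightened))
```

A small brightness change alters every byte, so the MD5 digest changes completely, while the perceptual fingerprint stays the same because the overall pattern of light and dark areas has not moved.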

In a statement, Apple said: "At Apple, our goal is to create technology that empowers people and enriches their lives - while helping them stay safe.

"We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of child sexual abuse material."

"This programme is ambitious and protecting children is an important responsibility. These efforts will evolve and expand over time," Apple added.

https://news.google.com/__i/rss/rd/articles/CBMiU2h0dHBzOi8vbmV3cy5za3kuY29tL3N0b3J5L2FwcGxlLXRvLXNjYW4taXBob25lcy1mb3ItaW1hZ2VzLW9mLWNoaWxkLWFidXNlLTEyMzc0MDky0gFXaHR0cHM6Ly9uZXdzLnNreS5jb20vc3RvcnkvYW1wL2FwcGxlLXRvLXNjYW4taXBob25lcy1mb3ItaW1hZ2VzLW9mLWNoaWxkLWFidXNlLTEyMzc0MDky?oc=5

2021-08-06 11:26:15Z
