After BBC reporter Ellie House came out as gay, she realised that Netflix already seemed to know. How did that happen?

I realised that I was bisexual in my second year of university, but Big Tech seemed to have worked it out several months before me.

I'd had one long-term boyfriend before then, and always considered myself straight. To be honest, dating wasn't at the top of my agenda.

However, at that time I was watching a lot of Netflix and I was getting more and more recommendations for series with lesbian storylines, or bi characters.

These were TV series that my friends - people of a similar age, with a similar background, and similar streaming histories - were not being recommended, and had never heard of.

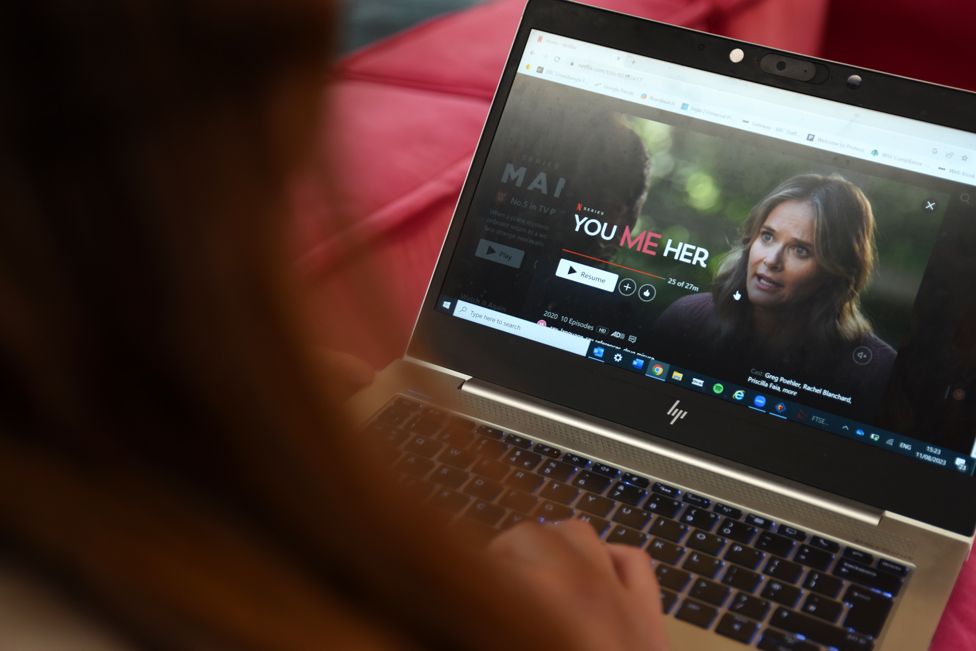

One show that stuck out was called You Me Her, about a suburban married couple who welcome a third person into their relationship. Full of queer storylines and bi characters, it has been described as TV's "first polyromantic comedy".

It wasn't just Netflix. Soon, I had spotted similar recommendations on several platforms. Spotify suggested a playlist it described as "sapphic" - a word to describe women who love women.

After a couple of months on TikTok, I started seeing videos on my feed from bisexual creators.

A few months later, I came to the separate realisation that I myself was bisexual.

What signs had these tech platforms read that I myself hadn't noticed?

User, meet content

Netflix has 222 million users globally and thousands of films and series available to stream across endless genres. But any individual user will only stream on average six genres a month.

In order to show the content it thinks people want to watch, Netflix uses a powerful recommendation system. This network of algorithms helps to decide which videos, images, and trailers populate a user's home page.

For instance, You Me Her is tagged with the genre code '100010' - or "LGBTQ+ Stories" to a human eye.

The goal of a recommender system is to marry the person using the platform with the content.

This digital matchmaker takes in information on both sides, and draws connections. Things like the genre of a song, what themes are explored in a film, or which actors are in a TV show can all be tagged. Based on this, the algorithm will predict who is most likely to engage with what.
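To make the matchmaking idea concrete, here is a minimal sketch of tag-based matching. It is not Netflix's system: the catalogue, the titles, the tags and the function names `tag_profile` and `recommend` are all hypothetical, and real recommenders combine far more signals. It only shows how tags on watched titles can be turned into a ranking of unwatched ones.

```python
from collections import Counter

# Hypothetical catalogue: title -> set of tags (all names are illustrative).
CATALOGUE = {
    "Show A": {"comedy", "romance", "suburban"},
    "Show B": {"comedy", "workplace"},
    "Show C": {"crime", "drama"},
    "Show D": {"romance", "drama", "suburban"},
}


def tag_profile(watched):
    """Count how often each tag appears across the titles a user watched."""
    profile = Counter()
    for title in watched:
        profile.update(CATALOGUE[title])
    return profile


def recommend(watched, top_n=2):
    """Rank unwatched titles by the summed profile weight of their tags."""
    profile = tag_profile(watched)
    scores = {
        title: sum(profile[tag] for tag in tags)
        for title, tags in CATALOGUE.items()
        if title not in watched
    }
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


print(recommend(["Show A"]))
```

A viewer who has only watched "Show A" is pointed first to "Show D", which shares two of its tags, and then to "Show B", which shares one.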

"Big data is this vast mountain," says former Netflix executive Todd Yellin in a video for the website Future of StoryTelling. "By sophisticated machine-learning techniques, we try to figure out - what are the tags that matter?"

But what do these platforms know about their users - and how do they find it out?

Under UK data privacy laws, individuals have a right to find out what data is being held about them by an organisation. Many of the streaming and social media companies have created an automated system for users to request this information.

I downloaded all of my information from eight of the biggest platforms. Facebook had been keeping track of other websites I'd visited, including a language-learning tool and hotel listings sites. It also had the coordinates to my home address, in a folder titled "location".

Instagram had a list of more than 300 different topics it thought I was interested in, which it used for personalised advertising.

Netflix sent me a spreadsheet which detailed every trailer and programme I had watched, when, on what device, and whether it had auto-played or whether I'd selected it.

There was no evidence that any of these platforms had tagged anything to do with my sexuality. In a statement to the BBC, Spotify said: "Our privacy policy outlines the data Spotify collects about its users, which does not include sexual orientation. In addition, our algorithms don't make predictions about sexual orientation based on a user's listening preferences."

Other platforms have similar policies. Netflix told me that what a user has watched and how they've interacted with the app is a better indication of their tastes than demographic data, such as age or gender.

How you watch, not what you watch

"No one is explicitly telling Netflix that they're gay," says Greg Serapio-Garcia, a PhD student at the University of Cambridge specialising in computational social psychology. But the platform can look at users that have liked "queer content".

A user doesn't have to have previously streamed content tagged LGBT+ to receive these suggestions. The recommender systems go deeper than this.

According to Greg, one possibility is that watching certain films and TV shows which are not specifically LGBTQ+ can still help the algorithm predict "your propensity to like queer content".
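One common way this kind of inference can work is by counting which titles tend to be watched by the same people, without looking at tags at all. The sketch below assumes that approach purely for illustration; the viewing histories, titles and the function names `co_watch_counts` and `suggest` are invented, and nothing here is confirmed as the method any platform uses.

```python
from collections import Counter
from itertools import permutations

# Hypothetical viewing histories (user -> set of titles); purely illustrative.
HISTORIES = {
    "u1": {"Title X", "Title Y", "Title Q"},
    "u2": {"Title X", "Title Q"},
    "u3": {"Title Y", "Title Z"},
    "u4": {"Title X", "Title Y", "Title Q"},
}


def co_watch_counts(histories):
    """Count, for each ordered pair of titles, how many users watched both."""
    counts = Counter()
    for titles in histories.values():
        for a, b in permutations(titles, 2):
            counts[(a, b)] += 1
    return counts


def suggest(watched, histories, top_n=1):
    """Suggest unwatched titles most often co-watched with the user's titles."""
    counts = co_watch_counts(histories)
    scores = Counter()
    for (a, b), n in counts.items():
        if a in watched and b not in watched:
            scores[b] += n
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [title for title, _ in ranked[:top_n]]


print(suggest({"Title X"}, HISTORIES))
```

In this toy data, someone who has only watched "Title X" is offered "Title Q", simply because other people who watched one usually watched the other. No label describing the viewer is ever stored.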

What someone watches is only part of the equation; often, how someone uses a platform can be more telling.

Listen to Did Big Tech know I was gay before I did?, presented by Ellie House on BBC World Service at 02:32 and 09:32 GMT on 15 August - or download as a podcast on BBC Sounds

Other details can also be used to make predictions about a user - for instance, the percentage of time they stay continuously watching, or whether they go through the credits.

According to Greg, these habits may not really mean anything on their own, but taken together across millions of users, they can be used to make "really specific predictions".

So the Netflix algorithm may have predicted my interest in LGBT+ storylines based not only on what I had watched in the past. It may also have taken into account when I clicked on a title, and which device I was watching on.

For me, it's a matter of curiosity, but in countries where homosexuality is illegal, Greg thinks that it could potentially put people in danger.

Speaking to LGBT+ people around the world, I've heard conflicted messages. On the one hand, they often love what they're recommended on streaming sites - maybe they even see it as liberating.

But on the other hand, they're worried.

"I feel like it is an intrusion of our privacy," I'm told by one gay man (who we are keeping anonymous for his safety).

"It's giving you a little bit more knowledge of what your life would be if it was free. And that feeling is beautiful and it's good." But, he adds, the algorithms "do really scare me a little bit".

https://news.google.com/rss/articles/CBMiLmh0dHBzOi8vd3d3LmJiYy5jby51ay9uZXdzL3RlY2hub2xvZ3ktNjY0NzI5MzjSATJodHRwczovL3d3dy5iYmMuY28udWsvbmV3cy90ZWNobm9sb2d5LTY2NDcyOTM4LmFtcA?oc=5

2023-08-12 23:25:41Z
