Almost exactly a year ago, Google showed us one of the most mind-blowing tech demos in recent times – AR glasses that could instantly translate speech in another language for the wearer, all in real time. These Google Glass descendants could give us, as Google claimed, "subtitles for the world".

Unfortunately, as is often the case with Google demos, there was a problem – these AR translation glasses were only "an early prototype" that Google had been testing. The vaporware alarm bells started ringing, and Google has since gone worryingly quiet on the concept.

That's a shame, as Google's AR translation glasses are one of the few hardware ideas of the past few years that promise to solve a big problem, rather than a minor inconvenience. As Google said last year, the technology could break down language barriers, while helping the deaf or hard of hearing to follow conversations.

But all is not lost. We're in the run-up to Google IO 2023, which starts on May 10 and gives Google a chance to tell us how its AR translation glasses are progressing. It's an opportunity that Google needs to take, if we aren't to lose faith in the whole concept actually becoming reality.

We don't need Google to announce a finished consumer product or shipping dates at IO 2023. But if the keynote passes without a mention of its live-translation glasses, we'll have to assume they've been given a First Class ticket to the Google Graveyard. Fortunately, there are good reasons to think this isn't the case.

Lost in translation

Google's plan to give us the sci-fi dream of live translation tech actually dates back to 2017, when it introduced the original Google Pixel Buds alongside the Pixel 2. Back then, Google said real-time translation would work "like having a personal translator by your side", but reality failed to live up to that promise.

Even six years on, performing live translation with the Google Pixel Buds Pro is a clunky experience. The promise of Google's AR translation glasses is that they could simplify this, showing you the other person's translated response as text in front of your eyes, rather than interrupting the flow by blasting it into your ear.

We don't yet know if the technological stars have aligned in order to make this happen, but some increasingly hot competition (and Google's own research) appears to be shoving it in the right direction.

Since Google demoed its AR translation glasses, we've seen several companies demo similar concepts. The best so far have arguably been TCL's RayNeo X2 AR glasses, which we had the pleasure of trying at CES 2023.

TechRadar's Managing Editor Matt Bolton called the RayNeo X2s "the most convincing proof of concept for AR glasses that I've seen so far", with the key demo being – you guessed it – live translation and subtitling.

While there was a slight delay of two seconds between a person speaking and their question being translated into text at the bottom of the glasses, Matt Bolton was able to have a whole conversation with someone who was speaking entirely in Chinese. For a full two-way conversation, both people would ideally be wearing AR glasses, but it's a good start.

TCL isn't the only company to produce a working prototype of concept glasses similar to what Google showed last year. Oppo's Air Glass 2 managed to trump rivals like the Nreal Air and Viture One by offering a wireless design, which means you don't need a cable to pair the specs with your Oppo smartwatch or phone.

Sadly, the Air Glass 2 are unlikely to launch in western markets, and it's actually more likely that one of the other tech giants could steal Google's live-translation thunder. Cue Meta's screeching left-turn from the metaverse towards its intriguing side-hustle, announced in February 2022, to make a 'Universal Speech Translator'.

As the name suggests, this project aims to use machine learning and AI to give everyone "the ability to communicate with anyone in any language", as Mark Zuckerberg claimed. Crucially for Google's AR translation glasses, Meta also promised that "with improved efficiency and a simpler architecture, direct speech-to-speech could unlock near human-quality real-time translation for future devices, like AR glasses".

With Meta apparently planning to launch those AR glasses, currently dubbed Project Nazare, sometime in 2024, the heat is definitely on for Google to get moving with its AR translation specs – and ideally that means announcing some more concrete news at Google IO 2023.

Speech therapy

Will Google actually be in a position to announce a development for its AR translation glasses at its big developer conference next week? So far, there have been no rumors or leaks to suggest it will, but in recent months it's become clear that Google Translate, and languages in general, remain one of its big priorities.

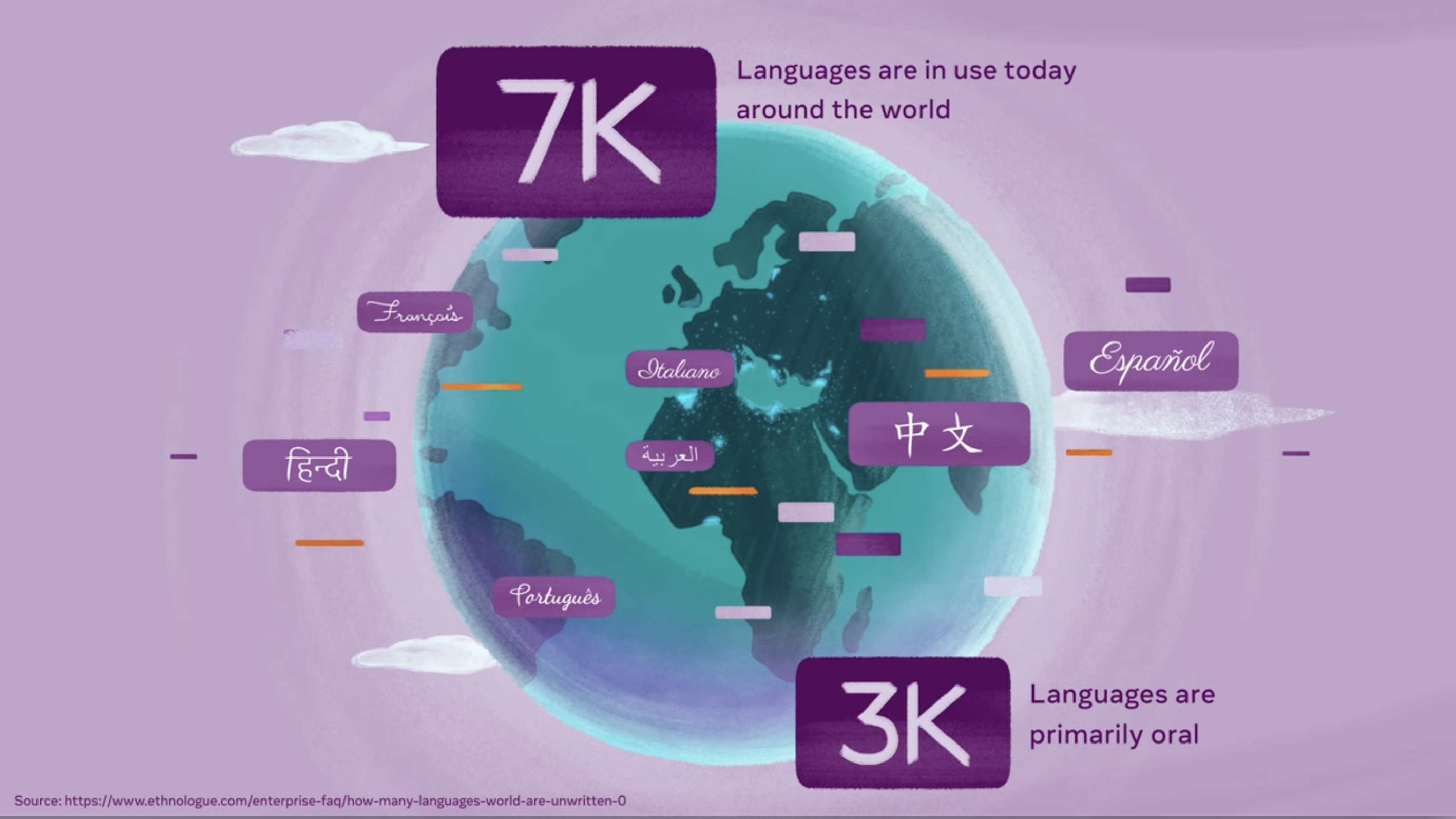

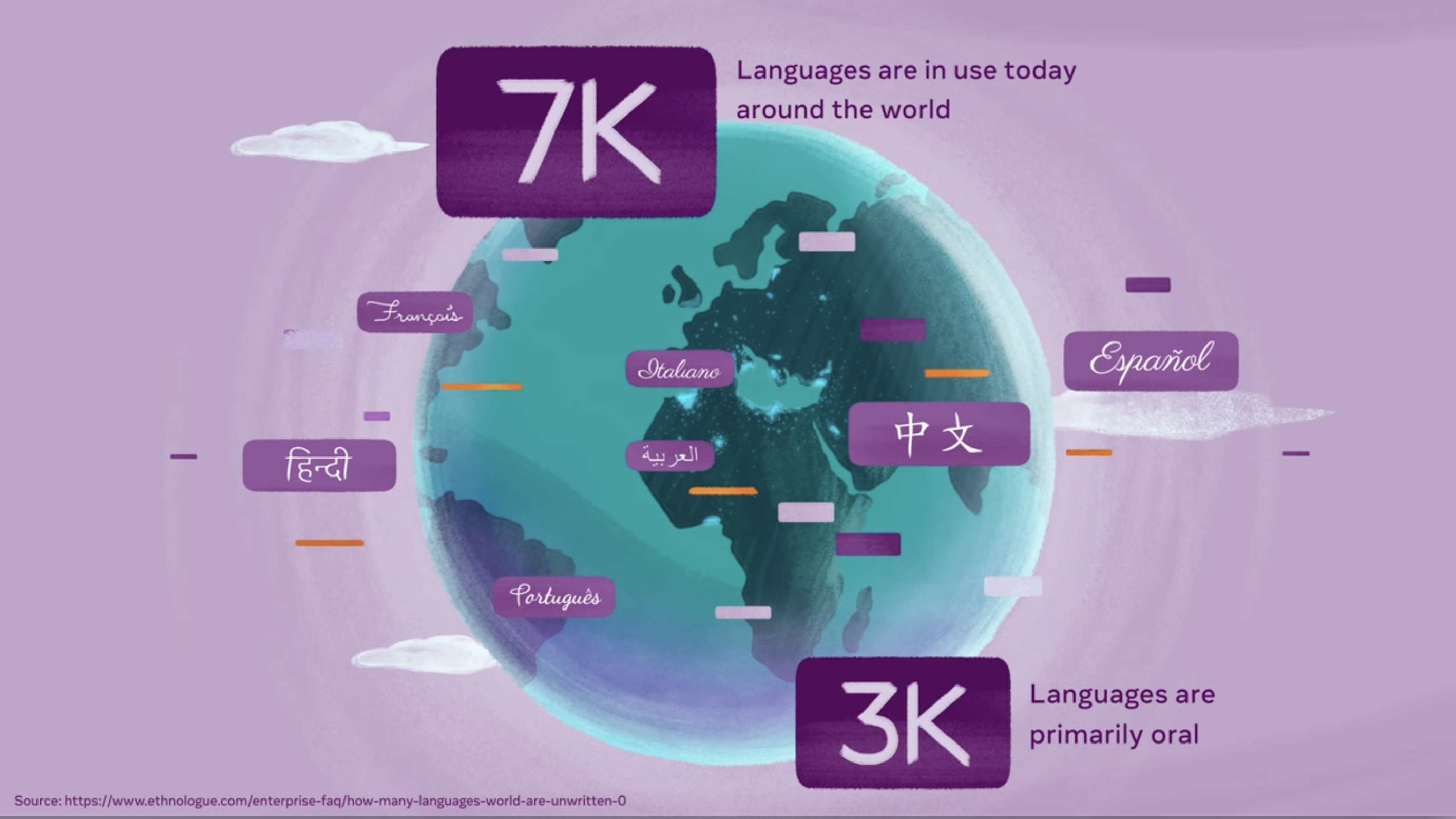

In March, Google's AI research scientists excitedly revealed more information about its Universal Speech Model (USM), which is at the heart of its plan to build an AI language model that supports 1,000 different languages.

This USM, which Google describes as a "family of state-of-the-art speech models", is already used on YouTube to generate auto-translated captions for videos in 16 languages, allowing YouTubers to grow their global audience.

Google says this AI has been trained on 12 million hours of speech and 28 billion sentences of text, spanning over 300 languages. That's some hefty training data that could hopefully make a significant contribution to AR translation glasses, like the ones that Google demoed a year ago.

Unfortunately, there's been little evidence that Google's recent advances have been making their way into Google Translate. While the company announced last year that it had added 24 languages to Translate – taking it to a total of 133 supported languages – its progress has seemingly plateaued, with rivals like DeepL widely considered to be more accurate in certain languages.

Still, Google has made advances elsewhere, revealing in February that Translate will soon become much better at understanding context. It will, for example, be able to understand if you’re talking about ordering a bass (the fish) for dinner or ordering a bass (the instrument) for your band.

Google added that Translate will, for a handful of supported languages, start using "the right turns of phrase, local idioms, or appropriate words depending on your intent", allowing translated sentences to match how a native speaker talks.

All of this again sounds like an ideal foundation for AR translation glasses that work a little more like Star Trek's Universal Translator, and less like the clunky, staccato experiences we've had in the past.

Seeing is believing

Google already has plenty to talk about at Google IO 2023, from the new Google Pixel Fold to Google Bard, and the small matter of Android 14. But it does also feel like a now-or-never moment for its AR translation glasses to take a sizable step towards becoming reality.

This year, Google has already been put on the back foot by the rise of ChatGPT and, next month, it looks likely that we'll see the long-anticipated arrival of the Apple AR/VR headset at WWDC 2023. If Google wants to be seen as a hardware innovator, rather than a sloth-like incumbent, it needs to start turning some of its most innovative ideas into real-world objects.

In some ways, Google's AR translation glasses feel like the ideal contrast to Apple's mixed-reality headset, which analysts have predicted will throw everything at the wall to see which use-case sticks.

A simpler wearable that does one thing well – or at least better than its recently-launched rivals – could be just the boost Google needs during a year when it's seemingly been under attack from all sides. We're looking forward to seeing its response at Google IO 2023 – and will be keeping a close eye on its presenters' spectacles.

2023-05-06 10:30:00Z